KUBERNETES:

In the quest to further abstract the technology stack and help developers deploy and scale their applications better than ever, I present to you Kubernetes. Kubernetes is a leader in container orchestration and is designed to run on many types of platforms and runtimes. One of the most actively contributed-to projects on GitHub, Kubernetes is an open-source wonder that grew out of Google's experience with its internal Borg system. Kubernetes is written in Go and is used by companies such as Capital One, eBay, and Spotify.

A key ingredient in the way that Kubernetes handles load and scaling is containerization. Containers are a clever way to bundle together the software and dependencies required to run a service repeatedly and reliably. This allows for repeated deployment without having to worry about changes to the runtime, the code base, or the dependencies. If configured correctly, containers also lend themselves well to auto-scaling. Container images can be hosted in registries and pulled down when needed.

Containers are often confused with virtual machines. The key difference between these two virtualization technologies is the shared kernel. Whereas each virtual machine is deployed with its own user space and its own kernel, containers share the host's kernel while keeping their processes and user spaces separate.

A very popular containerization tool is Docker. Docker bundles together the code required for building and running an application and creates an image which can be deployed in the same state repeatedly. Images built with Docker are the ones Kubernetes clusters most often run.

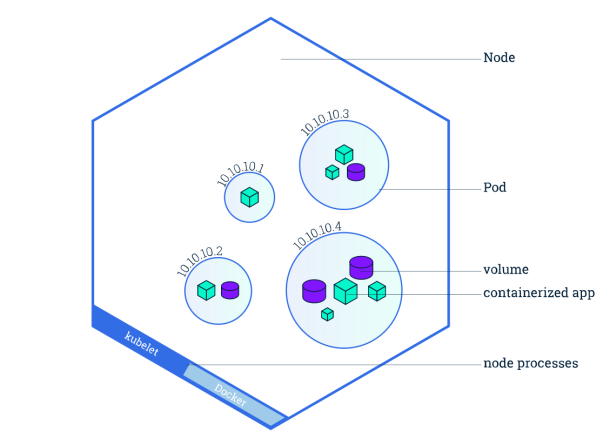

Kubernetes takes advantage of these characteristics of containers in order to perform tasks at scale. The architecture of Kubernetes is broken down into a few key pieces which make up a Kubernetes Cluster.

Source: Nodes and Pods Image

Pods:

A pod is a grouping of one or more containers that share storage and network resources. Each pod also has a specification for how to run the containers it controls. The containers within a pod can reach each other over localhost. For a container to talk directly to containers in other pods, the configuration needs to be set up specifically to allow it. In many ways a pod is similar to an abstracted version of a machine host.
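To make the idea of a pod specification more concrete, here is a minimal sketch that builds a two-container pod manifest as a plain Python dictionary and prints it as JSON. The field names (`apiVersion`, `kind`, `metadata`, `spec.containers`, `volumes`, `volumeMounts`) follow the Kubernetes Pod API, but the pod name, container names, images, and shell command are hypothetical values chosen only for illustration.

```python
import json


def make_pod_manifest(name):
    """Build a two-container Pod manifest as a plain dict.

    All names, images, and commands below are illustrative assumptions.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # One volume declared at the pod level and mounted by both containers.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [
                        {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                    ],
                },
                {
                    "name": "content-writer",
                    "image": "busybox:1.36",
                    # Writes into the shared volume. Because both containers share
                    # the pod's network, it could also reach "web" at localhost:80.
                    "command": [
                        "sh",
                        "-c",
                        "while true; do date > /data/index.html; sleep 5; done",
                    ],
                    "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
                },
            ],
        },
    }


manifest = make_pod_manifest("example-pod")
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and handed to the cluster, since manifests may be written in JSON as well as YAML. The point of the sketch is only that the storage volume is declared once for the pod and then mounted by each container inside it.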

Pods make management easier and simplify deployment. A single pod definition bundles multiple resources and can be deployed repeatedly to accomplish the same or similar tasks at scale. Because a pod is described by a specification, it carries many of the advantages of treating infrastructure as templates.

Nodes:

A node is also referred to as a worker (or, in older documentation, a minion). This is the machine that the containers and pods are deployed on top of. The node needs to have a container runtime installed, such as Docker. Each node is managed by the Kubernetes master, and each node can contain multiple pods.

Two important parts of the node are the kubelet and the container runtime. The kubelet takes responsibility for communication between the Kubernetes master and the node. The runtime pulls container images from an image registry (like Docker Hub), unpacks them, and runs the application.

Kubernetes Master:

The Kubernetes Master is a specialized node which takes responsibility for the overall coordination of the cluster. It runs three main processes: the kube-apiserver, the kube-controller-manager, and the kube-scheduler. The kube-apiserver handles the REST operations for the cluster. The kube-controller-manager keeps a virtual eye on the cluster’s current state and takes action to bring it back to the desired state if things get off track. The kube-scheduler has a large impact on the availability, performance, and capacity of the cluster, since it decides which node each new pod runs on while taking the cluster's current state into account.
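The "current state versus desired state" idea behind the kube-controller-manager can be sketched as a small loop. This is a toy model under stated assumptions, not the real controller code: the `ToyController` class, its `reconcile` method, and the `pod-N` naming are all hypothetical and exist only to show the observe, compare, act pattern.

```python
import itertools


class ToyController:
    """Toy control loop: compare running pods with the desired count and act."""

    def __init__(self, desired_replicas):
        self.desired_replicas = desired_replicas
        self.running = set()            # names of pods currently running
        self._ids = itertools.count(1)  # source of fresh (hypothetical) pod names

    def reconcile(self):
        """Run one pass and return the list of actions that were taken."""
        actions = []
        # Too few pods: create new ones until the desired count is reached.
        while len(self.running) < self.desired_replicas:
            name = f"pod-{next(self._ids)}"
            self.running.add(name)
            actions.append(("create", name))
        # Too many pods: delete the extras.
        while len(self.running) > self.desired_replicas:
            name = sorted(self.running)[-1]
            self.running.remove(name)
            actions.append(("delete", name))
        return actions


controller = ToyController(desired_replicas=3)
first_pass = controller.reconcile()    # starts pod-1, pod-2, pod-3
controller.running.discard("pod-2")    # simulate a pod crashing
second_pass = controller.reconcile()   # notices the gap and starts pod-4
third_pass = controller.reconcile()    # nothing to do, state already matches

print(first_pass)
print(second_pass)
print(third_pass)
```

Note that nobody tells the loop that `pod-2` crashed. It simply observes that two pods are running where three are wanted and closes the gap, which is the sense in which the cluster is brought back to its desired state.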

Takeaways:

Each of these components of a Kubernetes cluster is critical to the success of the container orchestration. Containers can be spun up as demand increases, and some level of resiliency can be had by running multiple pods on separate nodes.
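The resiliency point can be illustrated with a toy placement function. This is a deliberately simplified sketch: the real kube-scheduler weighs many more factors than pod count, and the function names, pod names, and node names here are hypothetical.

```python
def schedule(pod_names, node_names):
    """Place each pod on the node that currently holds the fewest pods.

    Ties are broken by the order of node_names.
    """
    load = {node: 0 for node in node_names}
    placement = {}
    for pod in pod_names:
        node = min(node_names, key=lambda n: load[n])
        placement[pod] = node
        load[node] += 1
    return placement


def surviving_pods(placement, failed_node):
    """Return the pods that keep running if one node goes down."""
    return sorted(pod for pod, node in placement.items() if node != failed_node)


placement = schedule(["web-1", "web-2", "web-3"], ["node-a", "node-b", "node-c"])
print(placement)
# {'web-1': 'node-a', 'web-2': 'node-b', 'web-3': 'node-c'}

print(surviving_pods(placement, "node-a"))
# ['web-2', 'web-3']
```

Because the three replicas land on three different nodes, losing any single node still leaves two copies of the service running.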

Kubernetes may not be the tool of choice when it comes to running a single process. But when you have a fully fledged application with services written in multiple languages, each with its own dependencies and runtime requirements, each referencing a series of databases and requiring specific permissions, a Kubernetes cluster is definitely a viable option to consider.

Learn more from this 5-minute introduction to Kubernetes from VMware: